GPU calculation

Message boards : Number crunching : GPU calculation

| Author | Message |

|---|---|

Balmer Joined: 2 Dec 05 Posts: 2 Credit: 96,879 RAC: 0 |

Hello Rosetta Team, I'd like to see a GPU client for Rosetta@home. GPUs are MUCH, MUCH, MUCH, MUCH, MUCH, MUCH faster than CPUs. THANK YOU, Balmer, Switzerland |

|

Mod.Sense Volunteer moderator Joined: 22 Aug 06 Posts: 4018 Credit: 0 RAC: 0 |

Actually, GPUs are only MUCH, MUCH, MUCH, MUCH, MUCH, MUCH faster than CPUs for certain types of work. Rosetta Moderator: Mod.Sense |

|

4LG5zSZM7uiF1nVGZVqTRrjkXA6i Joined: 7 Mar 10 Posts: 14 Credit: 111,252,570 RAC: 0 |

"Hello Rosetta Team" Try building a computer with only a GPU and let us know how fast it is. If a GPU could do everything faster than a CPU, then Intel would be selling GPUs instead of CPUs. |

[VENETO] boboviz Joined: 1 Dec 05 Posts: 2137 Credit: 12,507,890 RAC: 760 |

"If a GPU could do everything faster than a CPU, then Intel would be selling GPUs instead of CPUs." Ehhh. https://www.digitaltrends.com/computing/intel-gpu-2020-launch/ |

[VENETO] boboviz Joined: 1 Dec 05 Posts: 2137 Credit: 12,507,890 RAC: 760 |

|

Chilean Joined: 16 Oct 05 Posts: 711 Credit: 26,694,507 RAC: 0 |

|

[VENETO] boboviz Joined: 1 Dec 05 Posts: 2137 Credit: 12,507,890 RAC: 760 |

Rosetta "could" run on a GPU if each GPU thread could handle a single model trajectory. But apparently each trajectory uses a lot of memory. The question is: "how much" memory? In 2009 the top GPU had 2 GB of GDDR3; now a top-level GPU has 32 GB of HBM2. But I don't know if that is enough. |

|
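To make the "how much memory" question concrete, here is a rough back-of-the-envelope sketch. The 2 GB and 32 GB card sizes come from the post above; the per-trajectory memory figure and the driver/runtime reserve are purely hypothetical placeholders, since nobody in the thread states a real number.

```python
# Back-of-the-envelope: how many independent model trajectories fit in GPU memory?
# ASSUMPTION: per-trajectory memory and the reserved overhead are hypothetical
# placeholders, not measured Rosetta figures; substitute real measurements.

GIB = 1024 ** 3  # bytes in one GiB
MIB = 1024 ** 2  # bytes in one MiB


def max_concurrent_trajectories(gpu_memory_gib: float,
                                per_trajectory_mib: float,
                                reserved_gib: float = 0.5) -> int:
    """Trajectories that fit after reserving some memory for driver/runtime."""
    usable_bytes = (gpu_memory_gib - reserved_gib) * GIB
    if usable_bytes <= 0:
        return 0
    return int(usable_bytes // (per_trajectory_mib * MIB))


if __name__ == "__main__":
    hypothetical_mib_per_trajectory = 500  # ASSUMPTION: placeholder value only
    for gib in (2, 32):  # 2009-era top card vs. current top card, per the post
        n = max_concurrent_trajectories(gib, hypothetical_mib_per_trajectory)
        print(f"{gib} GiB card: ~{n} trajectories")
```

With that placeholder, even a 32 GB card holds only a few dozen trajectories, far fewer than the thousands of threads a modern GPU can keep busy, which is one way to read the memory concern raised above.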

Usuario1_S Joined: 24 Mar 14 Posts: 92 Credit: 3,059,705 RAC: 0 |

"Hello Rosetta Team" Yes, and well-done projects like Folding@Home have both CPU and GPU clients, but it won't happen on this project, because unfortunately it is a merely functioning, mediocre one: there are not enough developers, or they don't really know what they are doing. Rosetta@Home is huge in RAM and storage, and it's not responsive, especially the Android client unless you have a really fast SoC in your phone; it also has lots of WU shortages, etc. The Android client looks like it's being developed, but WCG was always good at keeping its WUs relatively small and, if not "light", at least responsive and not needing much RAM. So I think R@H is huge on RAM and storage because it's not well developed, and probably never was; now it must be a nightmare to fix. Anyway, this happened on an optical recognition of cancer cells project in WCG: each WU took at least 6 hrs, up to 12, typically 8-10 hrs. Then a guy came along who knew the field of study and had experience in distributed computing, and he made a lot of optimizations; the results were even more precise, and most WUs took 2 hrs. That doesn't happen here and probably never will. I have complained many times about different issues, such as how they don't even put enough WUs in the queue, and all I got were empty promises that it would never happen again. I also made suggestions, and they never even said thank you. GPUs are basically massively parallel FPUs, so yes, they can calculate the 3D positions of protein molecules and even do some physics, certainly on nVidia GPUs, and I think on AMD ones too. The people who are verbally attacking you are doing so because they're covering for the ineptitude of this project. I'd recommend going with Folding@Home if you have a recent enough GPU; it's also protein folding, so you'd still be helping scientists. World Community Grid doesn't have GPU clients for most projects. Cheers. |

[VENETO] boboviz Joined: 1 Dec 05 Posts: 2137 Credit: 12,507,890 RAC: 760 |

"GPUs are basically massive parallel execution FPUs, so yes, they can calculate the 3D positions of protein molecules and even make some physics, for sure nVidia GPUs, but I think AMD ones too, so the people that are verbally attacking you because they're covering for the ineptitude of this project," I don't think it's "ineptitude". There are some issues: large memory use for simulations; heterogeneity of simulations (ab initio, folding, docking, etc.); and a lot of different people working on the Rosetta code without a clear, single standard for optimizing it. One solution may be to "fork" the code and use the GPU for only one kind of simulation (for example, "ab initio") while still crunching the other simulations on CPUs (as GPUGrid or Milkyway do). But it's up to the developers to decide. |

|

Usuario1_S Joined: 24 Mar 14 Posts: 92 Credit: 3,059,705 RAC: 0 |

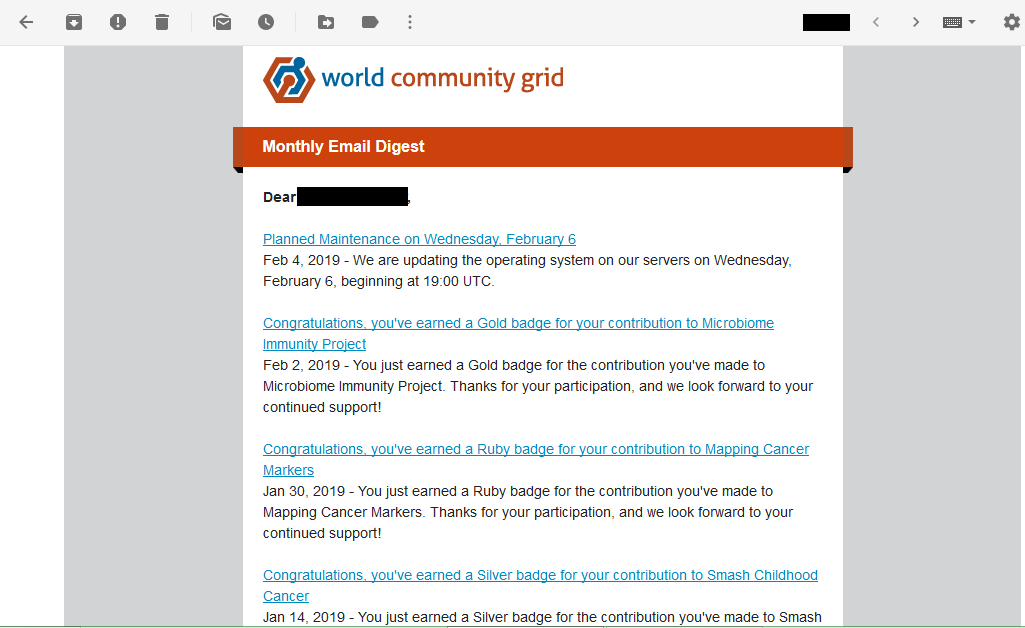

I think the team behaves ineptly because there aren't enough developers, the internal organization isn't coordinated enough to optimize work for mobile devices, and/or there isn't enough knowledge about programming and/or different computing hardware to do so. Look at this e-mail I just got: it's not hard to implement, just text and hyperlinks informing us of stuff. According to one member, the University of Washington receives a good amount of money to do this, and they could go Wikipedia-style, ask for donations via a mailing list, and pay a few developers well (I don't know, $7K) to do what's left. They don't seem to care. |

[VENETO] boboviz Joined: 1 Dec 05 Posts: 2137 Credit: 12,507,890 RAC: 760 |

"I think the team behaves inept, because of not enough developers," If I'm not wrong, the RosettaCommons developers (the source of R@H) number about 200 people. But I don't know how many of them are dedicated exclusively to the R@H code. "This e-mail I just got, not hard to implement, text and hyperlinks, informing of stuff, the University of Washington according to 1 member receives a good amount of money to do this, and they could go Wikipedia style and ask for donations in a mailing list, and pay well, I don't know 7K$ to a few developers to do what's left, they don't seem to care" I don't think money is the problem. From the IPD Annual Report: 140+ scientists. |

[VENETO] boboviz Joined: 1 Dec 05 Posts: 2137 Credit: 12,507,890 RAC: 760 |

Some steps towards GPU (and AI)?? "Tensorflow in Rosetta: Add ability to link Tensorflow's C libraries, to allow evaluation of feedforward neural networks on CPU or GPU." |

|
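For readers unfamiliar with the term, "evaluating a feedforward neural network" means pushing an input vector through a stack of dense layers, each computing `W x + b` followed by a nonlinearity. The pure-Python sketch below illustrates that computation on a CPU only; it does not use TensorFlow's C API, does not reflect how Rosetta actually wires TensorFlow in, and its layer sizes and weights are hypothetical toy values.

```python
# Minimal CPU-only sketch of a feedforward (dense) network forward pass.
# Illustrative only: not TensorFlow's C API and not Rosetta's integration;
# the layers used in the demo below are hypothetical toy values.
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]


def relu(v: Sequence[float]) -> Vector:
    """Elementwise max(0, x)."""
    return [max(0.0, x) for x in v]


def dense(x: Sequence[float], weights: Matrix, bias: Sequence[float]) -> Vector:
    """y = W x + b, with W stored as one row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def forward(x: Sequence[float], layers: Sequence[Layer]) -> Vector:
    """Apply each (weights, bias) layer; ReLU on hidden layers, linear output."""
    h = list(x)
    for i, (w, b) in enumerate(layers):
        h = dense(h, w, b)
        if i < len(layers) - 1:
            h = relu(h)
    return h


if __name__ == "__main__":
    toy_layers = [
        ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # hidden layer: 2 -> 2
        ([[2.0, 1.0]], [0.1]),                     # output layer: 2 -> 1
    ]
    print(forward([3.0, 1.0], toy_layers))
```

A GPU speeds this up mainly because the many multiply-adds inside `dense` are independent of one another and can run in parallel, which is the kind of work the moderator's "certain types of work" remark refers to.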

Jim1348 Joined: 19 Jan 06 Posts: 881 Credit: 52,257,545 RAC: 0 |

"Some steps towards Gpu (and AI)??" On GPUGrid, we run the Quantum Chemistry app in BOINC (on CPUs) to supply training data for their GPUs, which they run in-house. I have been wondering whether Rosetta might do something like that. It does not necessarily mean that we will be running a GPU app ourselves. But if we do, I will be glad to supply one GPU (or more). |

|

sgaboinc Joined: 2 Apr 14 Posts: 282 Credit: 208,966 RAC: 0 |

Using the GPU may require a redesign of the code base, which is a very expensive task (in terms of man-effort) and makes the code "brittle", i.e. researchers and developers may find it much harder to implement new protein algorithms. The insight comes from deep learning, which uses a lot of CUDA code: TensorFlow and Caffe (PyTorch) are built and designed around CUDA code today. If you want to run TensorFlow or Caffe on AMD GPUs, you find that you are *stuck* with nVidia; the alternative GPU code "doesn't exist", especially for the complicated neural-net layers and models. Even switching to a CPU-only build is difficult, as there really isn't an easy way to translate all that CUDA code back to CPU code. My guess is the same would happen should Rosetta choose to go the GPU route. Nevertheless, it is true that a GPU could speed up apps designed that way by hundreds of times compared with a conventional CPU. It is a cost-benefit tradeoff of sorts. Most people have at least a CPU (a PC, that is), and many are running a recent Haswell, Skylake, Kaby Lake, Ryzen or later CPU, hence more people can participate in CPU projects. GPUs run *hot*, *very hot*, and burn power like aluminium smelters: an nVidia 1070 GT easily burns upwards of 150 watts, and higher-end models burn even more, say 200-300 watts. That needs a large power supply, and it would be necessary to run it in an environment that can remove all that heat. GPUs are the right hardware for the right apps, and those don't normally fall into the rather "small" app scenarios. E.g. TensorFlow and Caffe (PyTorch) deep learning on networks in the wild eats 8-12 GB of GPU memory and siphons tens to hundreds of gigabytes of samples, running at full power on the GPU for 12-48 hours to train those super-deep convolutional neural networks. I doubt most (average) people are willing to commit that much energy to running BOINC jobs. But yes, apps optimised to run on GPUs work pretty much the way vector supercomputers do, and supercomputers normally use GPUs as well these days, I think mainly due to cost considerations. But vector apps are different from normal apps, and they are brittle to maintain: after you build a vector app, it won't be easy to port it to a different vector platform, and it could also be hard to make it run on a CPU again. |

|

sgaboinc Joined: 2 Apr 14 Posts: 282 Credit: 208,966 RAC: 0 |

I'd say that if Rosetta has particular jobs that run on GPUs, e.g. TensorFlow or PyTorch deep-learning jobs that run on *nVidia* CUDA-optimised code, it could possibly offer them as separate tasks for BOINC volunteers who have that high-end hardware and are willing to run those specific jobs. A "low-end" GPU probably won't help much for very specific tasks that need, say, to process a 500 GB volume of data, burning 150-200 watts for 24-48 hours on an nVidia 1080 Ti or higher GPU, to train real "in the wild" neural networks. And if the training dataset is huge (think 100 GB), volunteers may need to offer to download it or get it via "alternative" channels. |

|
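The power figures in the post above translate directly into energy per task: energy in kWh = power in watts × hours / 1000. A small sketch using the post's own ranges (150-200 W for 24-48 hours); it counts only the GPU draw quoted there, not the rest of the PC.

```python
# Energy used by one long GPU task: kWh = watts * hours / 1000.
# The wattage and duration ranges are the ones quoted in the post; the rest
# of the system's power draw is deliberately not included.


def task_energy_kwh(watts: float, hours: float) -> float:
    """Energy in kilowatt-hours for a constant draw of `watts` over `hours`."""
    return watts * hours / 1000.0


if __name__ == "__main__":
    for watts in (150, 200):
        for hours in (24, 48):
            kwh = task_energy_kwh(watts, hours)
            print(f"{watts} W for {hours} h = {kwh:.1f} kWh")
```

So a single 24-48 hour task at 150-200 W uses roughly 3.6 to 9.6 kWh of GPU energy alone, which is the commitment the post asks volunteers to weigh.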

sgaboinc Joined: 2 Apr 14 Posts: 282 Credit: 208,966 RAC: 0 |

I remember there was once an "innovative" (smart) researcher who distributed huge databases, say a few hundred megs, along with smaller sets of molecules/proteins, and most of those jobs *ended with errors* shortly after downloading hundreds of megs and starting to run, e.g. download 200 megs, run for a couple of seconds, abort: wrong model. I think some GPU scenarios may *mimic* such scenarios, i.e. they are huge network-bandwidth data guzzlers (think in excess of 100 GB). Perhaps that is borrowed from the "infinite monkey theorem" https://en.wikipedia.org/wiki/Infinite_monkey_theorem: "an infinite number of monkeys hitting keys at random on a typewriter keyboard for an infinite amount of time will almost surely type any given text, such as the complete works of William Shakespeare" (substitute CUDA cores for monkeys; perhaps worse than embarrassingly parallel). How do you win the lottery? Buy every permutation. |

|


[VENETO] boboviz Joined: 1 Dec 05 Posts: 2137 Credit: 12,507,890 RAC: 760 |

"gpu use may need redesign of the code base a very expensive (in terms of man-effort) task and makes it 'brittle'" I agree with all your considerations. Not all code is "GPU-able". But EVERY single step that accelerates the science, like GPGPU (with CUDA/OpenCL), AI (TensorFlow/Torch), CNNs or "simple" CPU optimization (SSE/AVX), is welcome!! |

|

sgaboinc Joined: 2 Apr 14 Posts: 282 Credit: 208,966 RAC: 0 |

"gpu use may need redesign of the code base a very expensive (in terms of man-effort) task and makes it 'brittle'" I'd think the GPU-intensive ones can literally be a different "class" of apps, e.g. TensorFlow and/or Caffe/Torch, and those apps generate the CPU-based algorithm optimizations for Rosetta@home itself. I.e. TensorFlow and/or Caffe/Torch do all the deep-learning data mining and generate likely folds, and R@H explores those folds and confirms them for unknown molecules/proteins. Of course, the catch with this approach is that instead of exploring a comprehensive subspace, only a subset of it is explored. The question will always be: if you only test 10 in 100 million possible and likely combinations, what makes you think those 10 in 100 million hit the lottery? But that is the efficacy of deep learning: it is based on the tightest statistics rather than comprehensive exploration of the problem space. It is based on "the unreasonable effectiveness of data" https://static.googleusercontent.com/media/research.google.com/en//pubs/archive/35179.pdf. And R@H can benefit from TensorFlow and/or Caffe/Torch CUDA-based jobs. The data files are likely to be *massive*, hence volunteers would likely need to download them separately, and *high-end* GPUs are required, e.g. an nVidia 1070 or higher, or better an RTX 2070 or higher, with no less than 8 GB of RAM on the GPU. Those jobs could possibly run at full power for 24-48 hours (or more), burning 150-300 watts all that time. |
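The "10 in 100 million" question can be made concrete with a uniform-random baseline: if the tested candidates were picked blindly, what is the chance that a correct combination is among them? The sketch below assumes, purely for illustration, a fixed number of correct combinations (one by default) and candidates drawn uniformly without replacement. The whole bet of deep-learning-guided sampling is that the model's picks do far better than this baseline, which the code does not (and cannot) show.

```python
# Uniform-random baseline for the "10 in 100 million" question.
# ASSUMPTION: `correct` good combinations exist and the tested candidates are
# drawn uniformly without replacement; a learned model is meant to beat this.
from fractions import Fraction
from math import comb


def hit_probability(space_size: int, tested: int, correct: int = 1) -> Fraction:
    """P(at least one correct item among `tested` distinct uniform draws)."""
    if tested >= space_size:
        return Fraction(1)  # testing everything always finds it
    # P(miss) = C(N - correct, t) / C(N, t): every draw lands on a wrong item.
    miss = Fraction(comb(space_size - correct, tested), comb(space_size, tested))
    return 1 - miss


if __name__ == "__main__":
    p = hit_probability(100_000_000, 10)
    print(p, float(p))
```

For a single correct combination this reduces to 10 / 100,000,000, i.e. one in ten million, and testing the entire space (`tested == space_size`) returns 1, which is the "buy every permutation" lottery joke from earlier in the thread in numeric form.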


©2025 University of Washington

https://www.bakerlab.org